Welcome to CARLA’s documentation!

CARLA is a Python library to benchmark counterfactual explanation and recourse methods. It comes out of the box with commonly used datasets and various machine learning models. It is designed with extensibility in mind: you can easily include your own counterfactual methods, new machine learning models, or other datasets.

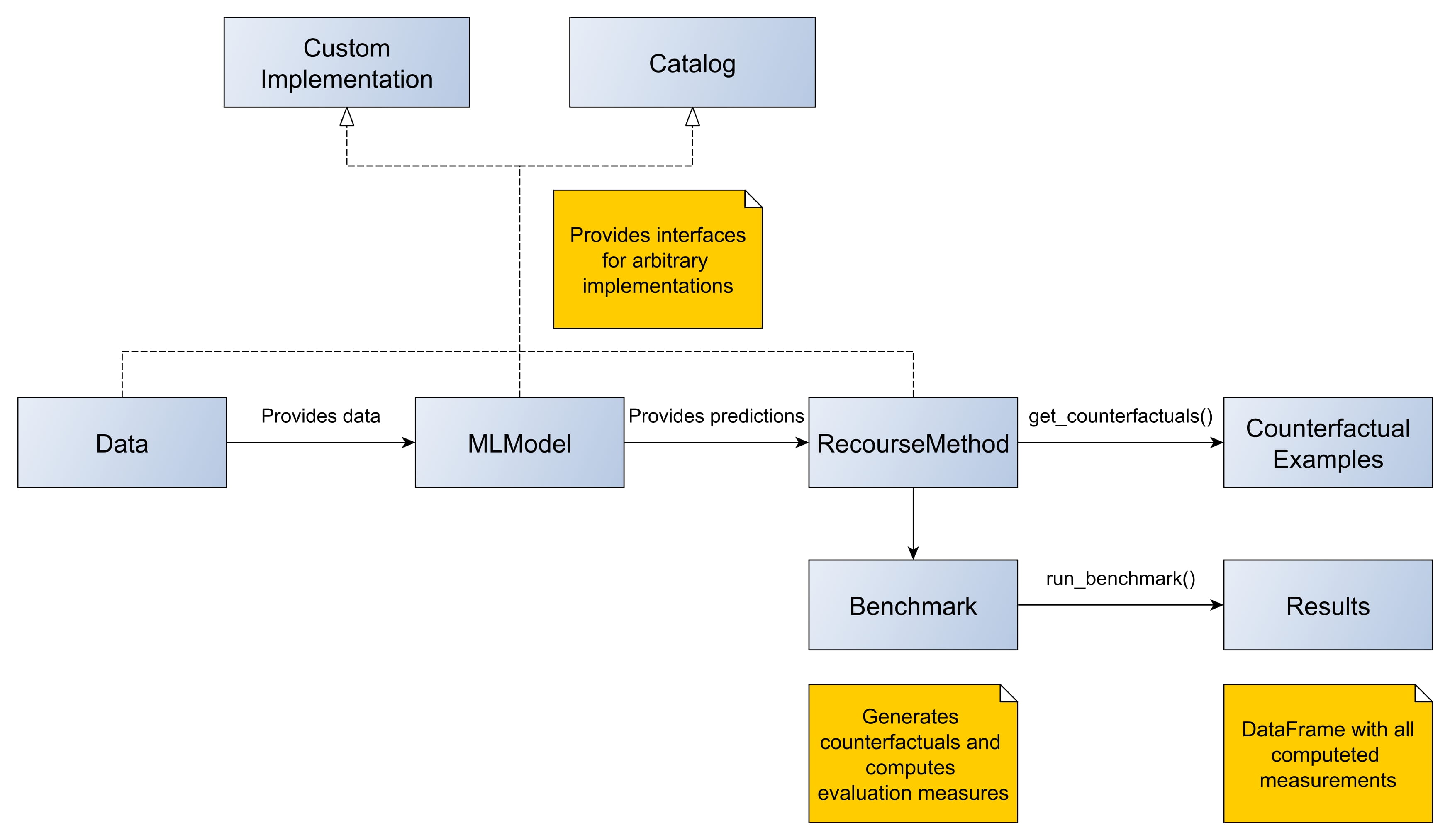

The image above shows the architecture of the CARLA Python library. Silver boxes represent the individual objects that are created to generate counterfactual explanations and evaluate recourse methods. Yellow notes explain specific processes. Dashed arrows show the alternative implementation options: either use predefined catalog objects or provide your own custom implementation. Solid arrows, each with a short description, show the dependencies between these objects.
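To make the dependency structure concrete, here is a minimal, self-contained sketch of how such objects can relate: a recourse method receives only a model, and a benchmark combines a dataset with a recourse method. The class names `DataSource`, `BlackBoxModel`, `CounterfactualGenerator`, the `benchmark` function and the toy implementations are hypothetical stand-ins chosen for illustration; they are not CARLA's actual API, and the only metric computed is a simple validity rate (the share of counterfactuals that flip the prediction).

```python
from abc import ABC, abstractmethod
from typing import List

Vector = List[float]


class DataSource(ABC):
    """Hypothetical dataset object (catalog or custom implementation)."""

    @abstractmethod
    def factuals(self) -> List[Vector]:
        """Instances for which counterfactuals should be generated."""


class BlackBoxModel(ABC):
    """Hypothetical wrapper around a trained classifier."""

    @abstractmethod
    def predict(self, x: Vector) -> int:
        """Predicted class (0 or 1) for a single instance."""


class CounterfactualGenerator(ABC):
    """Hypothetical recourse method: it only depends on the model interface."""

    def __init__(self, model: BlackBoxModel) -> None:
        self.model = model

    @abstractmethod
    def get_counterfactual(self, x: Vector) -> Vector:
        """Counterfactual for x, ideally with the opposite predicted class."""


def benchmark(data: DataSource, method: CounterfactualGenerator) -> float:
    """Validity: share of factuals whose counterfactual flips the prediction."""
    xs = data.factuals()
    flipped = sum(
        method.model.predict(method.get_counterfactual(x)) != method.model.predict(x)
        for x in xs
    )
    return flipped / len(xs)


# Toy custom implementations plugged into the interfaces above.
class ToyData(DataSource):
    def factuals(self) -> List[Vector]:
        return [[0.0, 0.0], [1.0, -2.0], [3.0, 3.0]]


class SumThresholdModel(BlackBoxModel):
    """Predicts class 1 when the feature sum is strictly positive."""

    def predict(self, x: Vector) -> int:
        return int(sum(x) > 0)


class ShiftMethod(CounterfactualGenerator):
    """Naive method: shift all features by a fixed step until the class flips."""

    def get_counterfactual(self, x: Vector, step: float = 0.5, max_iter: int = 100) -> Vector:
        target = 1 - self.model.predict(x)
        direction = 1.0 if target == 1 else -1.0
        cf = list(x)
        for _ in range(max_iter):
            if self.model.predict(cf) == target:
                break
            cf = [v + direction * step for v in cf]
        return cf


print(benchmark(ToyData(), ShiftMethod(SumThresholdModel())))  # → 1.0
```

Swapping `ShiftMethod` for another implementation of the same interface, or `SumThresholdModel` for another model, leaves `benchmark` unchanged; this mirrors the catalog-versus-custom choice indicated by the dashed arrows.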

Available Datasets

Implemented Counterfactual Methods

Actionable Recourse (AR): AR

Causal Recourse: Causal

CCHVAE: CCHVAE

Contrastive Explanations Method (CEM): CEM

Counterfactual Latent Uncertainty Explanations (CLUE): CLUE

CRUDS: CRUDS

Diverse Counterfactual Explanations (DiCE): DiCE

Feasible and Actionable Counterfactual Explanations (FACE): FACE

FeatureTweak: FeatureTweak

FOCUS: FOCUS

Growing Spheres (GS): GS

Revise: Revise

Wachter: Wachter
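As a concrete example of what one of these methods computes, the sketch below follows the idea behind Wachter et al.: search for a counterfactual x' that moves the model's prediction towards a target class y' while staying close to the original x, by minimising λ·(f(x') − y')² + d(x, x'). It is a simplified, stand-alone illustration, not CARLA's implementation. It assumes a hand-written logistic-regression model, uses squared Euclidean distance for d and a fixed λ (the original method uses a MAD-weighted L1 distance and adjusts λ during the search), and all function names and hyperparameter values are made up.

```python
import math
from typing import List

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: Vector, b: float, x: Vector) -> float:
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def wachter_counterfactual(
    x: Vector,
    w: Vector,
    b: float,
    target: float = 1.0,
    lam: float = 10.0,
    lr: float = 0.05,
    steps: int = 2000,
) -> Vector:
    """Minimise lam * (f(x') - target)^2 + ||x' - x||_2^2 by gradient descent.

    Simplification: fixed lam and squared L2 distance instead of the
    original MAD-weighted L1 distance with an adaptive lam.
    """
    cf = list(x)
    for _ in range(steps):
        p = predict_proba(w, b, cf)
        # Gradient of the prediction term, using dp/dz = p * (1 - p) and dz/dx' = w.
        scale = 2.0 * lam * (p - target) * p * (1.0 - p)
        # Add the gradient of the distance term, 2 * (x' - x).
        grad = [scale * wi + 2.0 * (ci - xi) for wi, ci, xi in zip(w, cf, x)]
        cf = [ci - lr * gi for ci, gi in zip(cf, grad)]
    return cf


w, b = [1.0, 2.0], -3.0  # toy model: class 1 when x1 + 2 * x2 > 3
x = [0.0, 0.0]           # factual, predicted as class 0
cf = wachter_counterfactual(x, w, b)
print(predict_proba(w, b, x) < 0.5, predict_proba(w, b, cf) > 0.5)  # → True True
```

Because the distance term pulls x' back towards x, the optimum lands just past the decision boundary rather than deep inside class 1; a larger `lam` trades proximity for a more confident prediction.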

Provided Machine Learning Models

ANN: Artificial neural network with two hidden layers and ReLU activation functions.

LR: Linear model with no hidden layer and no activation function.

Forest: Tree ensemble model.
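The structural difference between the ANN and LR models can be sketched as plain forward passes. This is an illustration only, assuming made-up layer sizes and weights; the catalog models themselves are built with ML frameworks such as TensorFlow or PyTorch, and the functions below (`dense`, `relu`, `linear_model`, `ann`) are hypothetical. Both functions return raw output scores; mapping them to class probabilities (for example with a sigmoid or softmax) is left out.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, bias); one weight row per output unit


def dense(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Affine map W @ x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]


def relu(v: Vector) -> Vector:
    return [max(0.0, vi) for vi in v]


def linear_model(x: Vector, layer: Layer) -> Vector:
    """LR-style: a single affine map, no hidden layer, no activation."""
    W, b = layer
    return dense(W, b, x)


def ann(x: Vector, layers: Sequence[Layer]) -> Vector:
    """ANN-style: two ReLU hidden layers, then an affine output layer."""
    (W1, b1), (W2, b2), (W3, b3) = layers
    h1 = relu(dense(W1, b1, x))
    h2 = relu(dense(W2, b2, h1))
    return dense(W3, b3, h2)


x = [1.0, -2.0]
lr_layer = ([[0.5, 0.25]], [0.1])
ann_layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0]),  # 2 -> 3 hidden units
    ([[1.0, 1.0, 1.0], [-1.0, 0.0, 0.0]], [0.5, 0.0]),        # 3 -> 2 hidden units
    ([[2.0, -1.0]], [0.0]),                                   # 2 -> 1 output
]
print(linear_model(x, lr_layer), ann(x, ann_layers))  # → [0.1] [3.0]
```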

Which Recourse Methods work with which ML framework?

Which ML frameworks a counterfactual method currently supports depends on its underlying implementation. We plan to make all recourse methods available for all ML frameworks. The current state is as follows:

| Recourse Method | TensorFlow | PyTorch | Sklearn | XGBoost |
|---|---|---|---|---|
| AR | X | X | | |
| Causal | X | X | | |
| CCHVAE | | X | | |
| CEM | X | | | |
| CLUE | | X | | |
| CRUDS | | X | | |
| DiCE | X | X | | |
| FACE | X | X | | |
| FeatureTweak | | | X | X |
| FOCUS | | | X | X |
| Growing Spheres | X | X | | |
| Revise | | X | | |
| Wachter | | X | | |
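If you select recourse methods programmatically, the compatibility table can be encoded as a lookup. The `SUPPORT` mapping and the `supports` and `methods_for` helpers are hypothetical and not part of CARLA; the lowercase backend strings are labels chosen for this sketch, and the mapping has to be updated by hand whenever the table changes.

```python
from typing import Dict, FrozenSet, List

# Hypothetical lookup transcribed by hand from the compatibility table.
SUPPORT: Dict[str, FrozenSet[str]] = {
    "AR": frozenset({"tensorflow", "pytorch"}),
    "Causal": frozenset({"tensorflow", "pytorch"}),
    "CCHVAE": frozenset({"pytorch"}),
    "CEM": frozenset({"tensorflow"}),
    "CLUE": frozenset({"pytorch"}),
    "CRUDS": frozenset({"pytorch"}),
    "DiCE": frozenset({"tensorflow", "pytorch"}),
    "FACE": frozenset({"tensorflow", "pytorch"}),
    "FeatureTweak": frozenset({"sklearn", "xgboost"}),
    "FOCUS": frozenset({"sklearn", "xgboost"}),
    "Growing Spheres": frozenset({"tensorflow", "pytorch"}),
    "Revise": frozenset({"pytorch"}),
    "Wachter": frozenset({"pytorch"}),
}


def supports(method: str, backend: str) -> bool:
    """True if the table marks `method` as working with `backend`."""
    return backend.lower() in SUPPORT.get(method, frozenset())


def methods_for(backend: str) -> List[str]:
    """All methods marked for `backend`, sorted by name."""
    return sorted(m for m in SUPPORT if supports(m, backend))


print(methods_for("sklearn"))  # → ['FOCUS', 'FeatureTweak']
```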

Citation

This project was accepted to NeurIPS 2021 (Datasets and Benchmarks Track).

If you use this codebase, please cite:

    @misc{pawelczyk2021carla,
          title={CARLA: A Python Library to Benchmark Algorithmic Recourse and Counterfactual Explanation Algorithms},
          author={Martin Pawelczyk and Sascha Bielawski and Johannes van den Heuvel and Tobias Richter and Gjergji Kasneci},
          year={2021},
          eprint={2108.00783},
          archivePrefix={arXiv},
          primaryClass={cs.LG}
    }

Please also cite the original authors’ work for every recourse method you use.